- Bug
- Resolution: Won't Do
- Medium
- None
- Beijing Release, Casablanca Release
- None

While investigating the broader problem of OOM ONAP (tested with the latest official release, Beijing) being unstable in TLAB, we identified several issues that mostly point to TLAB's disk I/O performance being too slow to keep up with an ONAP deployment, which prevents the deployment from starting successfully. TLAB's VMs are backed by spinning disks, which is why these problems are less apparent in Windriver, where the disks are SSDs.

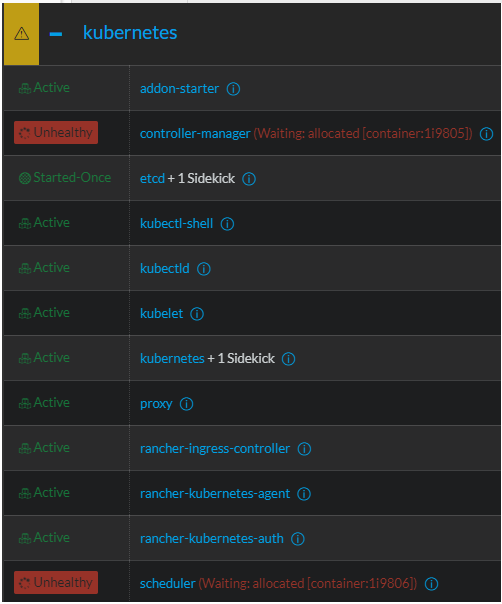

One of the causes of ONAP instability in TLAB is the subject of this Jira issue. The Rancher-based Kubernetes stack repeatedly failed to keep the etcd cluster running. After some testing, it turned out that the Kubernetes node hosting one of AAI's Cassandra containers was also hosting one of the etcd containers. The Cassandra container was running a stress.jar process that consumed nearly all of the node's disk I/O and therefore affected every container on that k8s node (including the etcd container). This led to very slow disk read/write times and ultimately crashed the etcd, controller-manager, and scheduler components, all of which are part of the K8S control plane. If the control plane fails, the spawned k8s resources either do not work correctly or fail completely.

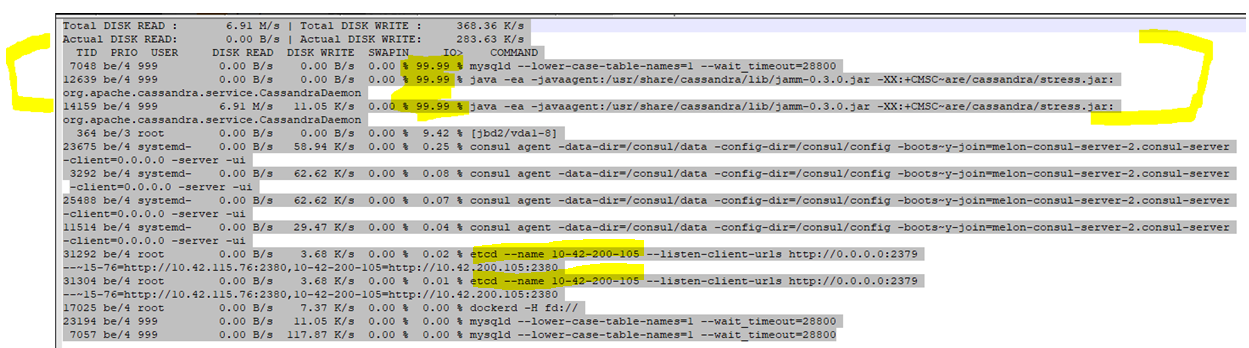
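As a rough illustration of how a disk-heavy process such as stress.jar might be spotted on a node, here is a minimal sketch. It assumes a Linux host where `/proc/<pid>/io` is readable (typically this needs root for processes owned by other users). The function names `parse_proc_io`, `read_io_snapshot`, and `top_io` are hypothetical helpers written for this example; they are not part of ONAP, Rancher, or Kubernetes tooling, and this is not necessarily how the issue was originally diagnosed.

```python
import os
from typing import Dict, List, Tuple


def parse_proc_io(text: str) -> Dict[str, int]:
    """Parse the 'key: value' lines of a /proc/<pid>/io file into a dict."""
    counters = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and value.strip().isdigit():
            counters[key.strip()] = int(value)
    return counters


def read_io_snapshot(proc_root: str = "/proc") -> Dict[int, Tuple[str, int]]:
    """Return {pid: (command name, read_bytes + write_bytes)} for readable processes."""
    snapshot = {}
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # only numeric directories are processes
        try:
            with open(os.path.join(proc_root, entry, "io")) as f:
                io = parse_proc_io(f.read())
            with open(os.path.join(proc_root, entry, "comm")) as f:
                name = f.read().strip()
        except OSError:
            continue  # process exited in the meantime, or permission denied
        total = io.get("read_bytes", 0) + io.get("write_bytes", 0)
        snapshot[int(entry)] = (name, total)
    return snapshot


def top_io(
    before: Dict[int, Tuple[str, int]],
    after: Dict[int, Tuple[str, int]],
    limit: int = 5,
) -> List[Tuple[int, str, int]]:
    """Rank processes present in both snapshots by bytes of disk I/O in between."""
    deltas = []
    for pid, (name, total) in after.items():
        if pid in before:
            deltas.append((pid, name, total - before[pid][1]))
    return sorted(deltas, key=lambda d: d[2], reverse=True)[:limit]
```

To use it, one would call `read_io_snapshot()` twice a few seconds apart on the affected k8s node and pass both results to `top_io`; a process that dominates the node's disk traffic should then appear at the top of the returned list.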

Below is AAI's stress.jar process, launched from the Cassandra container, which was starving the other containers on that k8s node (including the etcd containers) of disk resources:

The question: is there a way to completely disable this stress.jar process in the AAI Cassandra containers, or at least to limit how often it runs?