I am using OOM Jakarta to test Network Slicing use case. When OOF sending plan request to HAS-api pod, it always get timeout after 1 min, since the default timeout setting for has oof-has-api-nginx is 60 seconds. I see in Has-solver log, that it is able to find the solution about 5 mins after oof sending the plan request. Due to the timeout, OOF always return Error messages to so-oof-adapter.

Logs of oof:

st [POST]>, 'connection': <requests.adapters.HTTPAdapter object at 0x7fb823ece550>}] +00:00|2022-07-04T09:36:30.626+00:00|||Thread-2||||||||||DEBUG||||||||||||||Versions set in HTTP header to conductor: X-MinorVersion: 0 +00:00|2022-07-04T09:36:30.663+00:00|||Thread-2||||||||||DEBUG||||||||||||||Sending first Conductor request for request_id 1a7249b4-d7a8-413a-aaac-63aa889f8d2d +00:00|2022-07-04T09:36:30.664+00:00|||Thread-2||||||||||DEBUG||||||||||||||Payload to Conductor: {"name": "1a7249b4-d7a8-413a-aaac-63aa889f8d2d", "files": {}, "timeout": 1800, "num_solution": "1", "template": {"homing_template_version": "2020-08-13", "parameters": {"REQUIRED_MEM": "", "REQUIRED_DISK": "", "customer_lat": 0.0, "customer_long": 0.0, "service_name": "", "service_id": "", "latency": 20}, "demands": {"nst": [{"inventory_provider": "aai", "inventory_type": "nst", "service_type": "", "service_resource_id": "", "unique": "true", "filtering_attributes": {"model-role": "NST"}}]}, "constraints": {"nst_Threshold": {"type": "threshold", "demands": ["nst"], "properties": {"evaluate": [{"attribute": "latency", "operator": "lte", "threshold": {"get_param": "latency"}, "unit": "ms"}]}}}, "optimization": {"goal": "minimize", "operation_function": {"operator": "sum", "operands": [{"function": "attribute", "params": {"attribute": "latency", "demand": "nst"}}]}}}} +00:00|2022-07-04T09:36:30.665+00:00|||Thread-2||||||||||DEBUG||||||||||||||Requesting URL: https://oof-has-api:8091/v1/plans/ +00:00{color:red}|2022-07-04T09:37:30.705+00:00|{color}||Thread-2||||||||||DEBUG||||||||||||||Received response [code: 504, headers: {'Server': 'nginx/1.21.4', 'Date': 'Mon, 04 Jul 2022 09:37:30 GMT', 'Content-Type': 'text/html', 'Content-Length': '167', 'Connection': 'keep-alive'}, data: {'_content': b'<html>\r\n<head><title>504 Gateway Time-out</title></head>\r\n<body>\r\n<center><h1{color:red}>504 Gateway Time-out</{color}h1></center>\r\n<hr><center>nginx/1.21.4</center>\r\n</body>\r\n</html>\r\n', '_content_consumed': True, '_next': None, 'status_code': 504, 'headers': {'Server': 'nginx/1.21.4', 'Date': 'Mon, 04 Jul 2022 09:37:30 GMT', 'Content-Type': 'text/html', 'Content-Length': '167', 'Connection': 'keep-alive'}, 'raw': <urllib3.response.HTTPResponse object at 0x7fb771e56d90>, 'url': 'https://oof-has-api:8091/v1/plans/', 'encoding': 'ISO-8859-1', 'history': [], 'reason': 'Gateway Time-out', 'cookies': <RequestsCookieJar[]>, 'elapsed': datetime.timedelta(seconds=60, microseconds=15599), 'request': <PreparedRequest [POST]>, 'connection': <requests.adapters.HTTPAdapter object at 0x7fb771e562e0>}] +00:00|2022-07-04T09:37:30.744+00:00|||Thread-2||||||||||ERROR|||||||||||errorCode=400,errorDescription=Internal Error|||Error for 1a7249b4-d7a8-413a-aaac-63aa889f8d2d Traceback (most recent call last): File "/opt/osdf/apps/nst/optimizers/nst_select_processor.py", line 57, in process_nst_selection solution = self.get_nst_solution() File "/opt/osdf/apps/nst/optimizers/nst_select_processor.py", line 79, in get_nst_solution conductor_response = self.get_conductor(req_info, requirements, policies, model_name) File "/opt/osdf/apps/nst/optimizers/nst_select_processor.py", line 127, in get_conductor resp = e.response.json() File "/usr/local/lib/python3.9/site-packages/requests/models.py", line 910, in json return complexjson.loads(self.text, **kwargs) File "/usr/local/lib/python3.9/json/__init__.py", line 346, in loads return _default_decoder.decode(s) File "/usr/local/lib/python3.9/json/decoder.py", line 337, in decode obj, end = self.raw_decode(s, idx=_w(s, 0).end()) File "/usr/local/lib/python3.9/json/decoder.py", line 355, in raw_decode raise JSONDecodeError("Expecting value", s, err.value) from None json.decoder.JSONDecodeError: Expecting value: line 1 column 1 (char 0) +00:00|2022-07-04T09:37:30.745+00:00|||Thread-2||||||||||DEBUG|||||||||||errorCode=400,errorDescription=Internal Error|||Requesting URL: http://so-oof-adapter.onap:8090/so/adapters/oof/callback/v1/NSTSelectionResponse/1a7249b4-d7a8-413a-aaac-63aa889f8d2d +00:00|2022-07-04T09:38:30.857+00:00|||Thread-2||||||||||ERROR|||||||||||errorCode=400,errorDescription=Internal Error|||Error sending asynchronous notification for 1a7249b4-d7a8-413a-aaac-63aa889f8d2d Traceback (most recent call last): File "/opt/osdf/apps/nst/optimizers/nst_select_processor.py", line 65, in process_nst_selection rest_client.request(json=solution, noresponse=True) File "/opt/osdf/osdf/utils/interfaces.py", line 107, in request raise BaseException(res.raise_for_status()) File "/usr/local/lib/python3.9/site-packages/requests/models.py", line 953, in raise_for_status raise HTTPError(http_error_msg, response=self) requests.exceptions.HTTPError: 500 Server Error: for url: http://so-oof-adapter.onap:8090/so/adapters/oof/callback/v1/NSTSelectionResponse/1a7249b4-d7a8-413a-aaac-63aa889f8d2d

Logs of has-solver:

2022-07-04 04:41:51,118|None|140358593964832|INFO|service|conductor.solver.service: [-] plan id: a8d31059-a189-425b-b4b2-1088a6bb83a8 [-] Sovling starts, changing the template status from translated to solving, atomic update response from MUSIC SUCCESS 2022-07-04 04:41:51,119|None|140358593964832|INFO|service|conductor.solver.service: [-] plan id: a8d31059-a189-425b-b4b2-1088a6bb83a8 [-] Plan a8d31059-a189-425b-b4b2-1088a6bb83a8 with request id 1a7249b4-d7a8-413a-aaac-63aa889f8d2d is solving by machine onap-oof-has-solver-677f8645f7-z9cl6. Tried to solve it for 1 times. 2022-07-04 04:42:23,629|None|140358593964832|INFO|service|conductor.solver.service: [-] plan id: a8d31059-a189-425b-b4b2-1088a6bb83a8 [-] Customer Location for Latency Reduction 2022-07-04 04:42:23,630|None|140358593964832|INFO|service|conductor.solver.service: [-] plan id: a8d31059-a189-425b-b4b2-1088a6bb83a8 [-] country is not present is country latency table, looking for * wildcard entry 2022-07-04 04:42:39,887|None|140358593964832|INFO|service|conductor.solver.service: [-] plan id: a8d31059-a189-425b-b4b2-1088a6bb83a8 [-] No '*' wild card entry found in country latency table. No solution will be provided 2022-07-04 04:42:39,888|None|140358593964832|INFO|service|conductor.solver.service: [-] plan id: a8d31059-a189-425b-b4b2-1088a6bb83a8 [-] Done getting Latency Country DB Groups 2022-07-04 04:42:48,053|None|140358593964832|INFO|threshold|conductor.solver.optimizer.constraints.threshold: [-] plan id: a8d31059-a189-425b-b4b2-1088a6bb83a8 [-] Solving constraint nst_Threshold_nst of type 'threshold' for demand - [nst] 2022-07-04 04:43:20,600|None|140358593964832|INFO|service|conductor.solver.service: [-] plan id: a8d31059-a189-425b-b4b2-1088a6bb83a8 [-] Preparing the recommendations 2022-07-04 04:43:36,850|None|140358593964832|INFO|service|conductor.solver.service: [-] plan id: a8d31059-a189-425b-b4b2-1088a6bb83a8 [-] The plan with id a8d31059-a189-425b-b4b2-1088a6bb83a8 is inserted in order_locks table. {color:red}2022-07-04 04:43:53,139{color}|None|140358593964832|INFO|service|conductor.solver.service: [-] plan id: a8d31059-a189-425b-b4b2-1088a6bb83a8 [-] Plan search complete, changing the template status from solving to solved, atomic update response from MUSIC SUCCESS 2022-07-04 04:43:53,140|None|140358593964832|INFO|service|conductor.solver.service: [-] plan id: a8d31059-a189-425b-b4b2-1088a6bb83a8 [-] Plan a8d31059-a189-425b-b4b2-1088a6bb83a8 search complete, {color:red}1 solution(s) found by machine onap-oof-has-solver-677f8645f7-z9cl6{color} 2022-07-04 04:43:53,141|None|140358593964832|INFO|service|conductor.solver.service: [-] plan id: a8d31059-a189-425b-b4b2-1088a6bb83a8 [-] {color:red}Plan name: 1a7249b4-d7a8-413a-aaac-63aa889f8d2d{color}

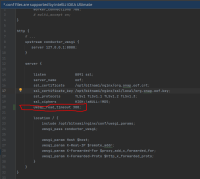

Log for has nginx:

2022/07/04 04:37:30 [error] 31#31: *1435 upstream timed out (110: Connection timed out) while reading response header from upstream, client: 10.1.23.238, server: oof, request: "POST /v1/plans/ HTTP/1.1", upstream: "uwsgi://127.0.0.1:8080", host: "oof-has-api:8091" 10.1.23.238 - admin1 [04/Jul/2022:04:37:30 -0500] "POST /v1/plans/ HTTP/1.1" 504 167 "-" "python-requests/2.26.0"

Based on my test, the response time from has to oof is about 1 min 40 seconds, so I have tried to upgrade the default timeout for nginx and RestClient (the class used to establish the rest query to has) in interfaces.py to 120 seconds. Then the issue has been solved.

Could you please update the code and nginx default settings accordingly? Thanks!

ps: Please ignore the hour inconsistency, it is probably due to the pod timezone setting.